Docker-in-Docker-in-Docker

Docker, docker-compose, and SSH services in Docker

Outside of work, my main focus has been UBC Launch Pad’s Inertia project, of which I am now the project lead. We want to develop an in-house continuous deployment system to make deploying applications simple and painless, regardless of the hosting provider.

The idea is to provide a Heroku-like application without being restricted to Heroku’s servers - in other words, we wanted a simple, plug-and-play continuous deployment solution for any virtual private server (VPS). Since UBC Launch Pad frequently changes hosting providers based on available funding and sponsorship, quick redeployment was always a hassle.

The primary design goals of Inertia are to:

- minimise setup time for new projects

- maximise compatibility across different client and VPS platforms

- offer a convenient interface for managing the deployed application

To achieve this, we decided early on to focus on supporting Docker projects - specifically docker-compose ones. Docker is a containerization platform that has blown up in popularity over the last few years, mostly thanks to its incredible ease of use. Docker-compose is a layer on top of the typical Dockerfiles you see - it is used to easily build and start up multiple containers at once using the command docker-compose up. This meant that we could let Docker handle the nitty gritty of building projects.

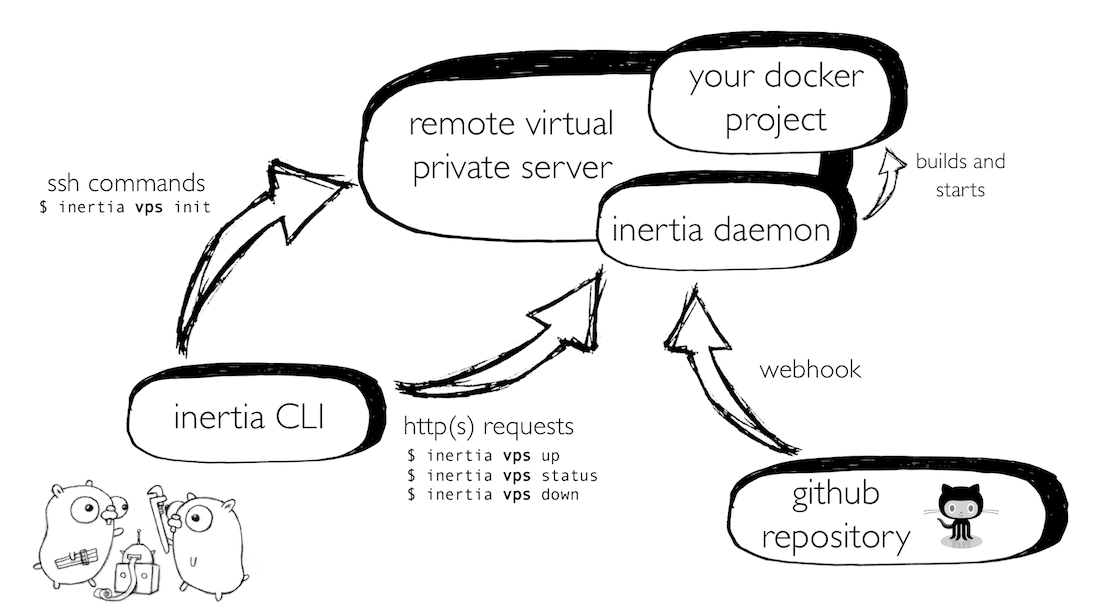

Architecturally, we decided to build Inertia in two components: a command line interface (CLI) and a serverside daemon.

The deployment daemon runs persistently in the background on the server, receiving webhook events from GitHub whenever new commits are pushed. The CLI provides an interface to adjust settings and manage the deployment - this is done through requests to the daemon, authenticated using JSON web tokens generated by the daemon. Remote configuration is stored locally in a per-project file that Inertia creates.

A slide from a presentation I gave about Inertia.

# Docker in Docker

There were a few options for how the Inertia daemon could be run on a VPS:

- compile and upload binaries beforehand, and have Inertia pull the correct binary for a given VPS

- install Go, clone the Inertia repository and start up the daemon from source

- build and maintain a Docker image on Docker Hub, and have Inertia pull the image

The first option was not ideal - not very platform-agnostic, and would require having a special place to host binaries that was not too easily user-accessible, since we don’t want the end user to accidentally use it (which ruled out using GitHub releases as a host). The second and third options were closer to what we wanted, and allowed us to lean on Go and Docker’s (likely) robust installation scripts to remove platform-specific difficulties from the equation.

We decided to go with the third option, since we had to install Docker anyway, which is itself a rather sizeable download - we wanted to avoid having too many dependencies. This meant that our daemon would have to be a Docker container with the permission to build and start other Docker images. One solution to this was to straight up install Docker again inside our container once it was started up.

This particular solution carries a number of unpleasant risks.

Fortunately, team member Chad came across an interesting alternative (also mentioned by the other post) that involved just a few extra steps when running our Inertia image to accomplish this:

- mount the host machine's Docker socket into the container - this grants the container access to the host's Docker daemon, so that through this socket the container can execute Docker commands on the host machine rather than just within itself.

- mount relevant host directories into the container - this includes things like SSH keys.

This practice, for the most part, seems discouraged, since it grants the Docker container considerable access to the host machine through the Docker socket, in contradiction to Docker’s design principles. And I can definitely understand why - the video in the link above demonstrates just how much power you have over the host machine if you can access the Docker-in-Docker container (effectively that of a root user, allowing you access to everything, even adding SSH users!). However, for our purposes, if someone does manage to SSH into a VPS to access the daemon container… then the VPS has already been compromised, and I guess that’s not really our problem.

Another disadvantage is that this container needs to be run as root. Hopefully we are trustworthy, because running a container as root grants an awful lot of control to the container.

Implementation-wise, this setup was just a long docker run command:

sudo docker run -d --rm `# runs container detached, remove container when shut down` \

-p "$PORT":8081 `# exposes port on host machine for daemon to listen on` \

-v /var/run/docker.sock:/var/run/docker.sock `# mounts host's docker socket to the same place on the container` \

-v $HOME:/app/host `# mounts host's directories for container to use` \

-e DAEMON="true" `# env variable for inertia code - CLI and daemon share the same codebase` \

-e HOME=$HOME `# exports HOME variable to the container` \

-e SSH_KNOWN_HOSTS='/app/host/.ssh/known_hosts' `# points env variable to SSH keys` \

--name "$DAEMON_NAME" `# name our favourite daemon` \

ubclaunchpad/inertia

Nifty note: comments in multiline bash commands didn’t work the way I expected - I couldn’t just append a normal # my comment to the end of each line. The snippet above uses command substitution to sneak in some comments.

Anyway, by starting the daemon with these parameters, we can docker exec -it inertia-daemon sh into the container, install Docker and start up new containers on the host alongside our daemon rather than inside it. Awesome! This is already pretty close to what we wanted.
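To see why the mounted socket is all a container needs, here is a small sketch that talks to the Docker engine directly over the Unix socket using only Go's standard library. It assumes the socket lives at `/var/run/docker.sock` and uses the Engine API's `/_ping` endpoint; the `newSocketClient` and `ping` helpers are names I made up, and the `docker` host name in the URL is just a placeholder, since the connection always goes to the socket.

```go
package main

import (
	"context"
	"fmt"
	"io"
	"net"
	"net/http"
)

// newSocketClient returns an HTTP client that sends every request over the
// Unix socket at socketPath instead of opening a TCP connection.
func newSocketClient(socketPath string) *http.Client {
	return &http.Client{
		Transport: &http.Transport{
			DialContext: func(ctx context.Context, _, _ string) (net.Conn, error) {
				var d net.Dialer
				return d.DialContext(ctx, "unix", socketPath)
			},
		},
	}
}

// ping calls the Engine API's /_ping endpoint and returns the response body.
func ping(client *http.Client) (string, error) {
	resp, err := client.Get("http://docker/_ping")
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	body, err := ping(newSocketClient("/var/run/docker.sock"))
	if err != nil {
		// Expected when no Docker engine is listening on the socket.
		fmt.Println("docker socket not reachable:", err)
		return
	}
	fmt.Println("docker engine replied:", body)
}
```

Run inside a container started with the `-v /var/run/docker.sock:/var/run/docker.sock` mount, the request is answered by the host's engine, which is exactly the access (and the risk) described above.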

# docker-compose in Docker

With the daemon running with access to the Docker socket, we didn’t want to keep relying on bash commands. While it was inevitable that we would have to run SSH commands on the server from the client to set up the daemon, we wanted to avoid doing that to start project containers.

Docker is built on Go, and the moby (formerly Docker) project has a nice, developer-oriented API for the Docker engine - using this meant that we could avoid having to set up Docker all over again in the daemon’s container.

import (

"context"

docker "github.com/docker/docker/client"

"github.com/docker/docker/api/types"

"github.com/docker/docker/api/types/container"

"github.com/docker/docker/api/types/filters"

)

func example() {

cli, _ := docker.NewEnvClient()

defer cli.Close()

ctx := context.Background()

// Create and start a Docker container!

resp, _ := cli.ContainerCreate(ctx, /* ...params... */ )

_ = cli.ContainerStart(ctx, resp.ID, types.ContainerStartOptions{})

}

This functionality makes everything fairly straightforward if you already have your Docker image ready and on hand. However, building images from a docker-compose project is an entirely different issue. Docker-compose is not a standard part of the Docker build tools, and that meant that Docker’s Golang client did not offer this functionality. There is an experimental library, which was an option, but the big bold “EXPERIMENTAL” warning made me feel a little uncomfortable - not to mention the fact that it didn’t look very well-maintained, and it didn’t support version 3 of the docker-compose.yml standard.

For a while it seemed that I would have to install docker-compose and run it from bash. Fortunately, after much head-scratching and wandering the web over the winter, I came across a rather unexpected solution.

The idea came from the fact that, strangely, Docker offers a docker-compose image. Here’s what I came up with:

# Equivalent of 'docker-compose up --build'

docker run -d \

-v /var/run/docker.sock:/var/run/docker.sock `# mount the daemon's mounted docker socket` \

-v $HOME:/build `# mount directories (including user project)` \

-w="/build/project" `# working directory in project` \

docker/compose:1.18.0 up --build `# run the official docker-compose image!`

From the Daemon, I downloaded and launched the docker-compose image while granting it access to the host’s Docker socket and directories (where the cloned repository is). This started a Docker container alongside the Daemon container, which then started the user’s project containers alongside itself!

Dockerception - asking Docker to start a container (daemon), which starts another container (docker-compose), which then starts MORE containers (the user’s project). Whew.

Now that I knew this worked, doing it with the Golang Docker client was just a matter of replicating the command:

cli, _ := docker.NewEnvClient()

defer cli.Close()

ctx := context.Background()

// Pull docker-compose image

dockerCompose := "docker/compose:1.18.0"

cli.ImagePull(ctx, dockerCompose, types.ImagePullOptions{})

// Build docker-compose and run the 'up --build' command

resp, err := cli.ContainerCreate(

ctx, &container.Config{

Image: dockerCompose,

WorkingDir: "/build/project",

Env: []string{"HOME=/build"},

Cmd: []string{"up", "--build"},

},

&container.HostConfig{

Binds: []string{ // set up mounts - equivalent of '-v'

"/var/run/docker.sock:/var/run/docker.sock",

os.Getenv("HOME") + ":/build",

},

}, nil, "docker-compose",

)

No docker-compose install necessary. While this initial approach seemed to work for simple projects, at NWHacks, when I attempted to deploy my team’s project using Inertia, it didn’t build - still investigating what the cause is there. Oh well. If once you fail, try, try again?

The work I have mentioned in this post so far (which laid the foundation for Inertia’s daemon functionality) can be seen in this massive pull request.

# SSH Services in Docker

Asking Docker to start a container to start a container to start more containers is nice and everything but… I wanted to go deeper.

The reason was testing. For a while most of the team tested Inertia on a shared Google Cloud VPS, but I really wanted a way to test locally, without going through the steps needed to deploy to a real VPS. At some point I simply ran the Inertia Daemon locally to test out my changes.

inertia init

inertia remote add local 0.0.0.0 -u robertlin

docker build -t inertia .

sudo docker run \

-p 8081:8081 \

-v /var/run/docker.sock:/var/run/docker.sock \

-v /usr/bin/docker:/usr/bin/docker \

-v $HOME:/app/host \

-e SSH_KNOWN_HOSTS='/app/host/.ssh/known_hosts' \

-e HOME=$HOME --name="inertia-daemon" inertia

# ...

inertia local up # project deploys!

This was far from ideal - I was more or less testing if Inertia worked on macOS (which I’m pretty sure is not a popular VPS platform) and we were unable to really test any of Inertia’s SSH functionality, SSH key generation or Docker setup, to name a few. However, it did give Chad the idea to use Docker to simulate a VPS, and perhaps even launch it on Travis CI for instrumented tests.

So what we wanted, essentially, was to have Docker start a container (the mock VPS)… so that we could deploy a container (Inertia daemon)… to start a docker-compose container… to start test project containers.

To do this, a bit of setup outside of just pulling an Ubuntu Docker image had to be done - namely SSH setup. This guide from Docker helped me get started, and my initial Dockerfile.ubuntu was pretty much exactly the example in that post.

ARG VERSION

FROM ubuntu:${VERSION}

RUN apt-get update && apt-get install -y sudo && rm -rf /var/lib/apt/lists/*

RUN apt-get update && apt-get install -y openssh-server

RUN mkdir /var/run/sshd

RUN echo 'root:inertia' | chpasswd

RUN sed -i 's/PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config

RUN sed 's@session\s*required\s*pam_loginuid.so@session optional pam_loginuid.so@g' -i /etc/pam.d/sshd

ENV NOTVISIBLE "in users profile"

RUN echo "export VISIBLE=now" >> /etc/profile

This first part is from the example and it pulls the requested Ubuntu image, downloads and installs SSH tools and sets up a user and password (root and inertia respectively), as well as some other things that still make no sense to me.

Inertia is too cool for passwords and instead relies on SSH keys, so I had to add this:

# Point configuration to authorized SSH keys

RUN echo "AuthorizedKeysFile %h/.ssh/authorized_keys" >> /etc/ssh/sshd_config

# Copy pre-generated test key to allow authorized keys

RUN mkdir $HOME/.ssh/

COPY . .

RUN cat test_key.pub >> $HOME/.ssh/authorized_keys

The first command appends my line ("AuthorizedKeysFile...") to the given file (/etc/ssh/sshd_config). The rest create the .ssh directory, copy the key (which I set up beforehand) into the container and append its public half to authorized_keys. This allows me to SSH into the service.

EXPOSE 0-9000

CMD ["/usr/sbin/sshd", "-D"]

Note that EXPOSE does NOT mean “publish”. It simply says that this container should be accessible through these ports, but to access it from the host, we’ll need to publish it on run. I opted to expose every port from 0 to 9000 to make it easier to change the ports I am publishing.

docker run --rm -d `# run in detached mode and remove when shut down` \

-p 22:22 `# port 22 is the standard port for SSH access` \

-p 80:80 `# arbitrary port that an app I used to present needed` \

-p 8000:8000 `# another arbitrary port for some Docker app` \

-p 8081:8081 `# port for the inertia daemon` \

--name testvps `# everyone deserves a name ` \

--privileged `# because this is a special container` \

ubuntuvps

To facilitate simpler building, a Makefile (which I use in every project nowadays):

SSH_PORT = 22

VERSION = latest

VPS_OS = ubuntu

test:

make testenv-$(VPS_OS) VERSION=$(VERSION)

go test $(PACKAGES) --cover

testenv:

docker stop testvps || true && docker rm testvps || true

docker build -f ./test_env/Dockerfile.$(VPS_OS) \

-t $(VPS_OS)vps \

--build-arg VERSION=$(VERSION) \

./test_env

bash ./test_env/startvps.sh $(SSH_PORT) $(VPS_OS)vps

This way you can change what VPS system to test against using arguments, for example make testenv VPS_OS=ubuntu VERSION=14.04.

And amazingly this worked! The VPS container can be treated just as you would treat a real VPS.

make testenv-ubuntu

# note the location of the key that is printed

cd /path/to/my/dockercompose/project

inertia init

inertia remote add local

# PEM file: inertia test key, User: 'root', Address: 0.0.0.0 (standard SSH port)

inertia local init

inertia remote status local

# Remote instance 'local' accepting requests at https://0.0.0.0:8081

I could even include instrumented tests and everything worked perfectly:

func TestInstrumentedBootstrap(t *testing.T) {

remote := getInstrumentedTestRemote()

session := NewSSHRunner(remote)

var writer bytes.Buffer

err := remote.Bootstrap(session, "testvps", &Config{Writer: &writer})

assert.Nil(t, err)

// Check if daemon is online following bootstrap

host := "https://" + remote.GetIPAndPort()

resp, err := http.Get(host)

assert.Nil(t, err)

assert.Equal(t, http.StatusOK, resp.StatusCode)

defer resp.Body.Close()

_, err = ioutil.ReadAll(resp.Body)

assert.Nil(t, err)

}

Frankly I was pretty surprised this worked so beautifully. The only snag was that specific ports had to be published for an app deployed to a container VPS to be accessible, but I didn’t think that was a big deal. I also set up a Dockerfile for CentOS which took quite a while and gave me many headaches - you can click the link to see it if you want.

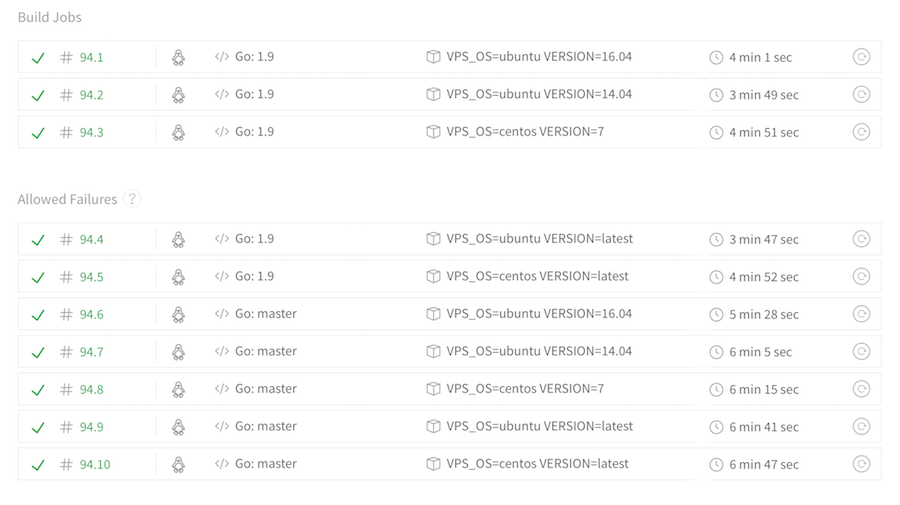

With all this set up, I could include it in our Travis builds to run instrumented tests on different target platforms:

services:

- docker

# Test different VPS platforms we want to support

env:

- VPS_OS=ubuntu VERSION=16.04

- VPS_OS=ubuntu VERSION=14.04

- VPS_OS=centos VERSION=7

- VPS_OS=ubuntu VERSION=latest

- VPS_OS=centos VERSION=latest

before_script:

# ... some stuff ...

# This will spin up a VPS for us. Travis does not allow use

# of Port 22, so map VPS container's SSH port to 69 instead.

- make testenv-"$VPS_OS" VERSION="$VERSION" SSH_PORT=69

script:

- go test -v -race

And now we can look all professional and stuff with all these Travis jobs that take forever!

# Random

Last Sunday I finally had a morning at home to relax, and at long last I got to see what my room looks like during the day.

I’ll need to eat more jam soon because I’m running a bit short on jars.